After another year of red, amber and green lists, maybe it’s time for a more positive kind of list.

For a while now, I’ve realized that I find myself spending a bit too much time on Reddit. I suppose it’s at least somewhat productive as I spend most of my time on r/machinelearning, r/datascience and r/dataisbeautiful, but I’ve been wanting to do more proper scientific reading so I decided to come up with a list of some papers, books and summaries to read this year.

To-read list

Bayesian Statistics and Modelling1

It’s been some time since I’ve worked with any Bayesian statistics. Unfortunately it tends to be quicker to prototype and develop non-Bayesian statistical and ML models, and development speed has been critical during my last nine months working at fast-paced start-up.

Particularly as I hope to learn more about Bayesian ML and statistics concepts such as probabilistic graphical models, Gaussian processes, variational inference and more, I think I’ll need a quick refresher on the core Bayesian principles and workflow. This primer was recommended to me by a friend, and just by skimming through it, it seems to be very well written and makes great use of visual aids to help explain concepts.

Also, Prof. Ruth King is a co-author on this primer, and she was a fantastic tutor when I was studying the Bayesian Theory course at the School of Mathematics, University of Edinburgh.

Bayesian Modeling and Computation in Python2

This one is a real New Years’ gift — Osvaldo Martin, Ravin Kumar and Junpeng Lao made this book freely available online just before the New Year!

One of the main reasons that this book is on my to-read list is that the authors are major contributors to some of the largest Bayesian computation packages in Python, such as PyMC3 and Tensorflow Probability. The book also has many code examples using the above packages.

While Python is my preferred language for scientific programming, I am only familiar with libraries for Bayesian computation in R, such as JAGS for MCMC simulation. I have been wanting to explore PyMC3 for a long time, and I feel that this book would be a great introduction.

The Beginning of The Monte Carlo Method3

Monte Carlo simulation is such a simple yet fascinating method, but the fact that MCMC originated due to the development of the first nuclear weapons in the 1940s makes the Monte Carlo method even more intriguing.

This short historical summary was actually a recommended reading that I never got around to looking at, for a course during my master’s studies. Another good reason to read it is that it was written by Nicholas Metropolis, who chose to name the method Monte Carlo, and is the co-inventor of the commonly-used Metropolis–Hastings algorithm for MCMC sampling.

Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning4

As part of one of my master’s projects, I explored Bayesian neural networks for uncertainty quantification, particularly on the task of time series anomaly detection.

As there was quite a tight time limit on this project, I avoided following a fully Bayesian approach to the problem (e.g. prior elicitation, MCMC sampling & diagnostics, sensitivity analysis, prior/posterior predictive checking). Instead, I came across this paper which proposes the idea that dropout used on a neural network during evaluation can be used to approximate uncertainty in a way equivalent to a Gaussian process (GP).

In modern frameworks such as PyTorch, this has a quick and simple implementation:

- Enable dropout during evaluation.

- For a single input \(x_i\), generate \(N\) random samples \(\hat{y}_i^{(1)},\ldots,\hat{y}_i^{(N)}\) by passing \(x\) through the network \(N\) times.

- Use the confidence interval of these \(N\) samples as an uncertainty measure.

While this was quite straightforward to do for my project, I would like to learn more about why exactly this is a Bayesian approximation, and how it relates to GPs — a whole topic that should probably have its own to-read list.

Combining Character and Word Information in Neural Machine Translation Using a Multi-Level Attention5

This paper by Chen et al. investigates an interesting approach to machine translation which combines word and character-level information, rather than the typical approach of just attending to certain words during translation.

Interestingly, I came across this paper when thinking about approaches to the separate problem of diacritization.

- Diacritics

- Small markings placed on letters to indicate a particular pronunciation of a character.

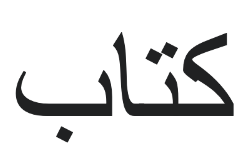

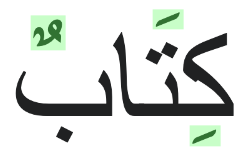

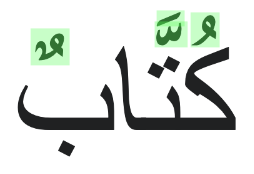

Below is an example of how diacritics (in green) can be placed above or below characters to produce different pronunciations in Arabic.

| English | - | Book | Writers |

|---|---|---|---|

| Arabic |  |

|

|

| Phonetic | ktāb | kitābun | kuttābun |

In everyday use of written Arabic, as well as other languages such as Hebrew, diacritics are often omitted to make writing easier. However, this can also make reading somewhat ambiguous in many cases. Using the above example, writing كتاب without diacritics could refer to either ‘book’ or ‘writers’.

By taking surrounding characters and words into context, proficient Arabic readers are often able to correctly restore these diacritics mentally when reading a sentence. In a similar manner, a neural diacritization system could use character and word-level attention to accurately restore diacritics, as explored by AlKhamissi et al.6

The Data Warehouse Toolkit (3rd Edition)7

Often listed as a fundamental read for anyone interested in data engineering, this book by Ralph Kimball & Margy Ross goes into detail about dimensional modelling, which is a critical process in data warehouse design.

Kimball is the originator of both data warehousing and the dimensional modelling process.

As the original edition is now quite dated, I’ve been recommended to read the latest edition as it is more up to date with some modern technologies used in data engineering.

The Evolving Role of the Data Engineer8

This is a more recent book about data engineering, taking a look at how data engineering processes and technology stacks have changed in recent years.

So far, this is the only book on this to-read list that I have began reading, and I would highly recommend it as an introductory data engineering book.

As I have lately been doing quite a bit of data science at my current job, I have also started to realize that the majority of my time goes into trying to manage and improve the way data is stored and prepared for the actual analysis and machine learning work. This has made me start to really appreciate the responsibilities of data engineers, and I aim to learn more about these processes and how they work on an a large scale.

Designing Data Intensive Applications 9

I am interested in reading this for the same reasons as The Evolving Role of the Data Engineer, but this book focuses more on applying data engineering principles and technologies to real-world scenarios such as building a large-scale web application or other network service.

Checklist

- Bayesian Statistics and Modelling

- Bayesian Modeling and Computation in Python

- The Beginning of The Monte Carlo Method

- Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning

- Combining Character and Word Information in Neural Machine Translation Using a Multi-Level Attention

- The Data Warehouse Toolkit

- The Evolving Role of the Data Engineer

- Designing Data Intensive Applications

-

Rens van de Schoot et al. “Bayesian statistics and modelling”, Nat Rev Methods Primers 1 (2021) ↩

-

Osvaldo A. Martin, Ravin Kumar, Junpeng Lao. “Bayesian Modeling and Computation in Python”, Online Copy, 1st Ed. (2021) ↩

-

Nicholas Metropolis. “The Beginning of the Monte Carlo Method”, Los Alamos Science Special Issue (1987), Vol. 15, pp. 125–130 ↩

-

Yarin Gal & Zoubin Ghahramani. “Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning”, Proceedings of the 33rd International Conference on International Conference on Machine Learning (2016), Vol. 48, pp. 1050–1059 ↩

-

Chen et al. “Combining Character and Word Information in Neural Machine Translation Using a Multi-Level Attention”, Proceedings of NAACL-HLT (2018), pp. 1284–1293 ↩

-

Badr AlKhamissi, Muhammad El-Nokrashy, Mohamed Gabr. “Deep Diacritization: Efficient Hierarchical Recurrence for Improved Arabic Diacritization”, Workshop on Arabic Natural Language Processing (2020) ↩

-

Ralph Kimball, Margy Ross. “The Data Warehouse Toolkit”, 3rd Ed. (2013) ↩

-

Andy Oram. “The Evolving Role of the Data Engineer”, (2020) ↩

-

Martin Kleppmann. “Designing Data-Intensive Applications”, (2017) ↩